Configure Advanced Policies/Features and Verify Network Virtualization Implementation

Today’s VCP6-DCV topic, Objective 2.1: Configure Advanced Policies/Features and Verify Network Virtualization Implementation, is the core of virtualization networking. Together with two other chapters, it covers all of vSphere 6 networking.

You can follow the VCP6-DCV study guide through my VCP6-DCV page. When it is finished, there will be a PDF version with proper formatting for a better reading experience. We’re more than halfway through right now, and the work continues. Let’s kick on with this chapter!

vSphere Knowledge

- Identify vSphere Distributed Switch (vDS) capabilities

- Create/Delete a vSphere Distributed Switch

- Add/Remove ESXi hosts from a vSphere Distributed Switch

- Add/Configure/Remove dvPort groups

- Add/Remove uplink adapters to dvUplink groups

- Configure vSphere Distributed Switch general and dvPort group settings

- Create/Configure/Remove virtual adapters

- Migrate virtual machines to/from a vSphere Distributed Switch

- Configure LACP on Uplink portgroups

- Describe vDS Security Policies/Settings

- Configure dvPort group blocking policies

- Configure load balancing and failover policies

- Configure VLAN/PVLAN settings

- Configure traffic shaping policies

- Enable TCP Segmentation Offload support for a virtual machine

- Enable Jumbo Frames support on appropriate components

- Determine appropriate VLAN configuration for a vSphere implementation

—————————————————————————————————–

Identify vSphere Distributed Switch (vDS) capabilities

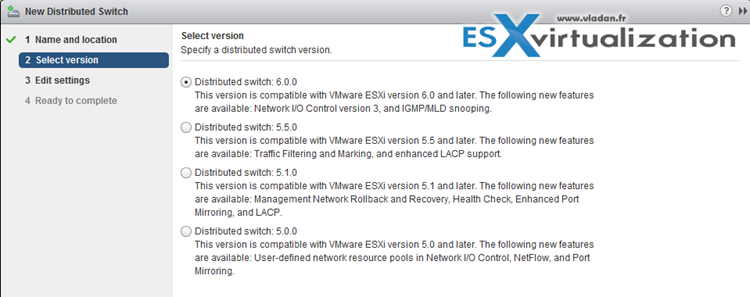

VMware vSphere Distributed Switch (vDS) is now at version 6 and packs in more features than previous releases. If you’re upgrading, you should upgrade your vDS to version 6.0 as well to benefit from the latest features.

The vDS separates the data plane from the management plane. The data plane resides on each ESXi host, while the management plane moves to vCenter Server. The data plane component on each host is called the host proxy switch.

- NetFlow Support – NetFlow is used for monitoring and troubleshooting; it samples network traffic at a configurable rate.

- PVLAN Support – PVLANs let you get more out of VLANs (which are limited in number): you can use them to further segregate your traffic and increase security. (Note: Enterprise Plus licensing required! Check my detailed post on PVLANs here.)

- Ingress and egress traffic shaping – Inbound/outbound traffic shaping, which allows you to throttle bandwidth to and from the switch.

- VM Port Blocking – You can block VM ports, for example when a VM is infected by a virus or during troubleshooting.

- Load Based Teaming – LBT is an additional load-balancing policy (Route based on physical NIC load) that moves traffic between uplinks based on their actual load.

- Central Management across clusters – With a vDS you create the configuration once and push it to all attached hosts, so you don’t have to configure each host one by one.

- Per Port Policy Settings – It’s possible to override policies at the port level, which gives you more control.

- Port State Monitoring – This feature allows each port to be monitored separately from other ports

- LLDP – Adds support for the Link Layer Discovery Protocol.

- Network IO Control – Lets you set priorities on port groups and reserve bandwidth for VMs connected to a port group. Check the detailed chapter on NIOC here: Objective 2.2: Configure Network I/O Control (NIOC)

- LACP Support – LACP (Link Aggregation Control Protocol) lets you aggregate several physical links into a single logical link (your physical switch must support it!)

- Backup/Restore Network config – It’s possible to back up and restore the network configuration at the vDS level (not new: it has been available since 5.1).

- Port Mirroring – Allows monitoring by sending a copy of all traffic from one port to another.

- Stats stay at the VM level – statistics move with the VM, even after vMotion.

Create/Delete a vSphere Distributed Switch

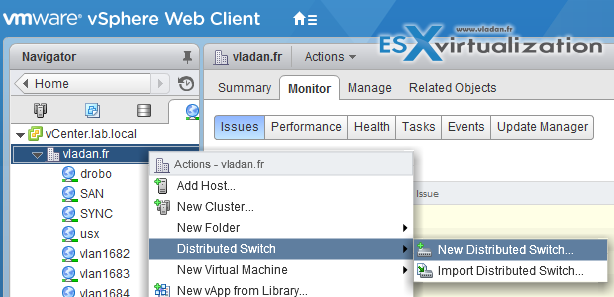

Create a vSphere vDS – Networking Guide on p27. vSphere Web Client > Networking > Right click datacenter > Distributed switch > New Distributed switch

Enter a name and then select the version…

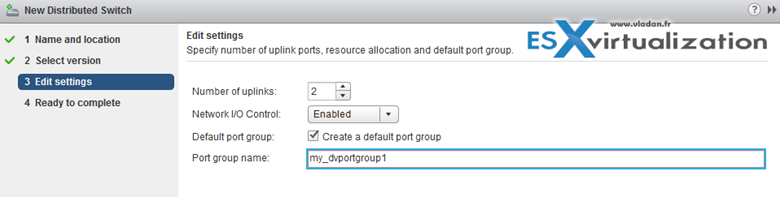

Select the number of uplinks, specify whether you want to enable Network I/O Control, and rename the default port group (optional)…

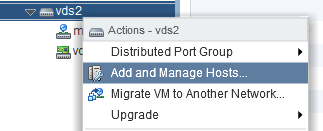

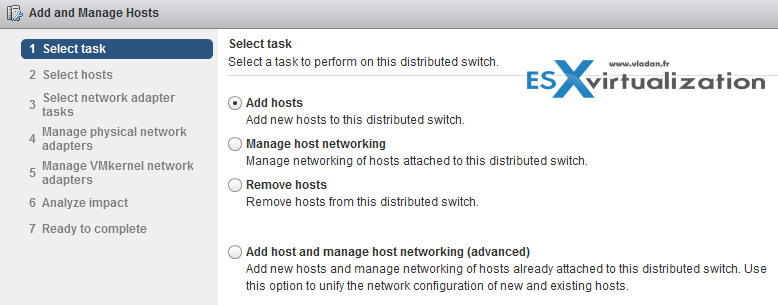

Add/Remove ESXi hosts from a vSphere Distributed Switch

You can add or remove ESXi hosts from a vDS to manage (or stop managing) their networking from a central location. The good thing is that you can analyze the impact before breaking connectivity. The impact can be as follows:

- No impact

- Important impact

- Critical impact

Next…

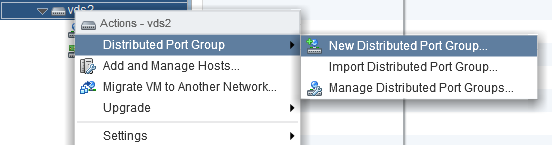

Add/Configure/Remove dvPort groups

Right click on the vDS > New Distributed Port Group.

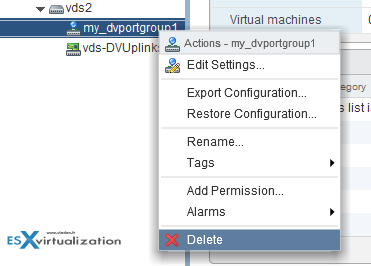

To remove a port group, simply right click on the port group > Delete…

Add/Remove uplink adapters to dvUplink groups

Again, right click is your friend… -:)

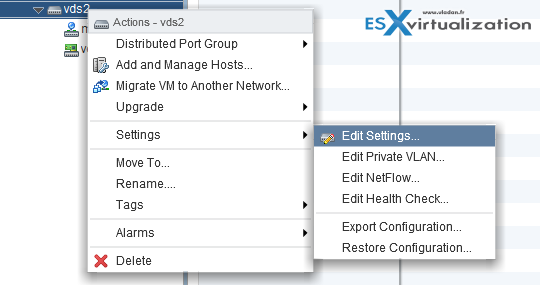

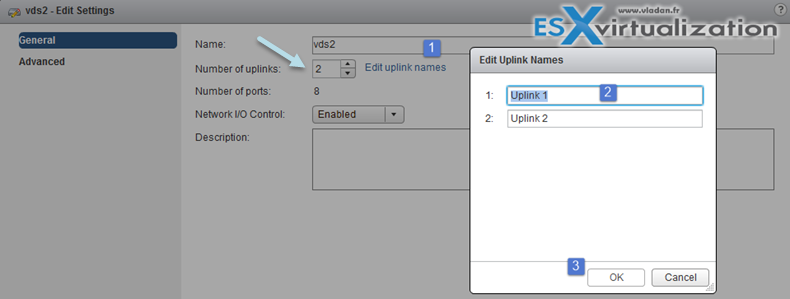

If you want to increase or decrease the number of uplinks, you can do so in the properties of the vDS.

Right click on the vDS > Edit settings

On the next screen you can change the number of uplinks. Note that you can also give your uplinks different names at the same time…

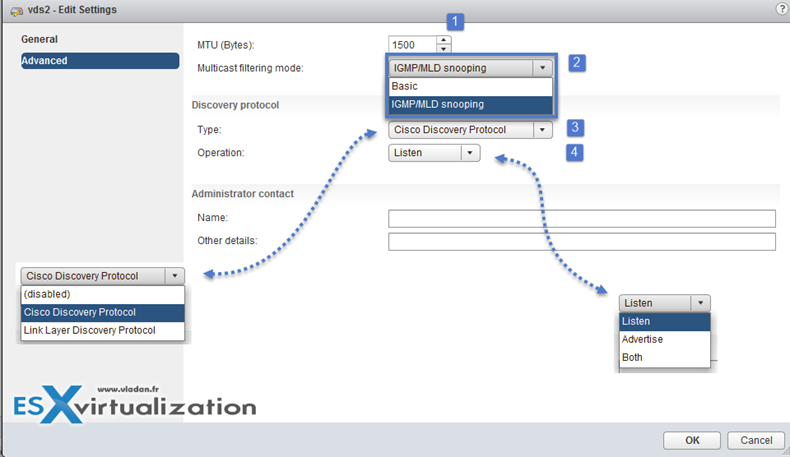

Configure vSphere Distributed Switch general and dvPort group settings

General properties of vDS can be reached via Right click on the vDS > Settings > Edit settings

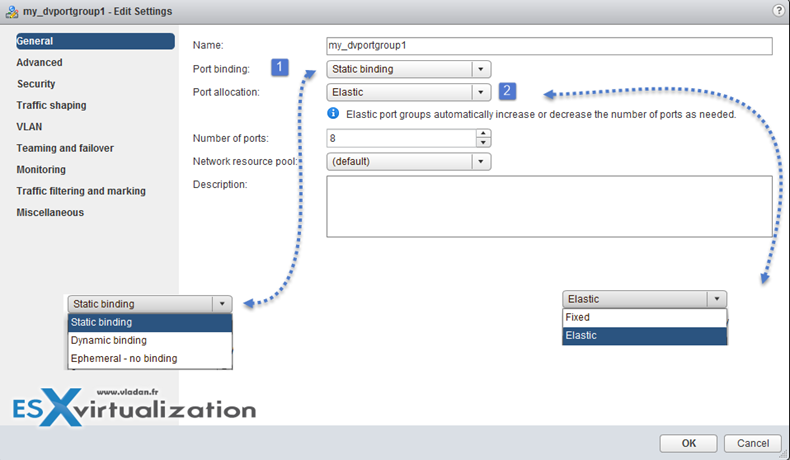

Port binding properties (at the dvPortGroup level – Right click port group > Edit Settings)

- Static binding – Assigns a port to a VM when the virtual machine is connected to the PortGroup.

- Dynamic binding – deprecated. For best performance, use static binding.

- Ephemeral – no binding

Port allocation:

- Elastic – The number of ports increases or decreases on the fly. The default is 8 ports, and the count increases by 8 when more are needed.

- Fixed – The number of ports is fixed; the default is 128.
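To make the elastic numbers concrete, here is a tiny Python sketch of how the port count might track the number of connected VMs. It assumes the count is always rounded up to the next multiple of 8 (never below the default of 8) and shrinks back in the same steps; `elastic_port_count` is a hypothetical helper, not a vSphere API.

```python
def elastic_port_count(connected_vms: int, step: int = 8) -> int:
    """Hypothetical model of elastic port allocation.

    Assumption: ports are allocated in blocks of `step` (8 by default),
    with a minimum of one block, so 9 connected VMs need 16 ports.
    """
    if connected_vms < 0:
        raise ValueError("connected_vms cannot be negative")
    blocks = max(1, -(-connected_vms // step))  # ceiling division, at least 1 block
    return blocks * step


for vms in (0, 8, 9, 20):
    print(vms, "VMs ->", elastic_port_count(vms), "ports")
```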

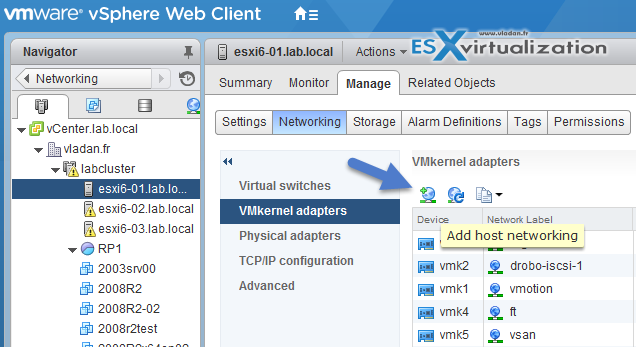

Create/Configure/Remove virtual adapters

VMkernel adapters can be added/removed at the Networking level:

vSphere Web Client > Host and Clusters > Select Host > Manage > Networking > VMkernel adapters

VMkernel adapters can carry different services, such as:

- vMotion traffic

- Provisioning traffic

- Fault Tolerance (FT) traffic

- Management traffic

- vSphere Replication traffic

- vSphere Replication NFC traffic

- VSAN traffic

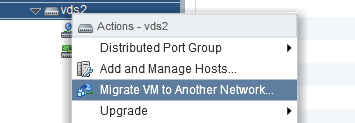

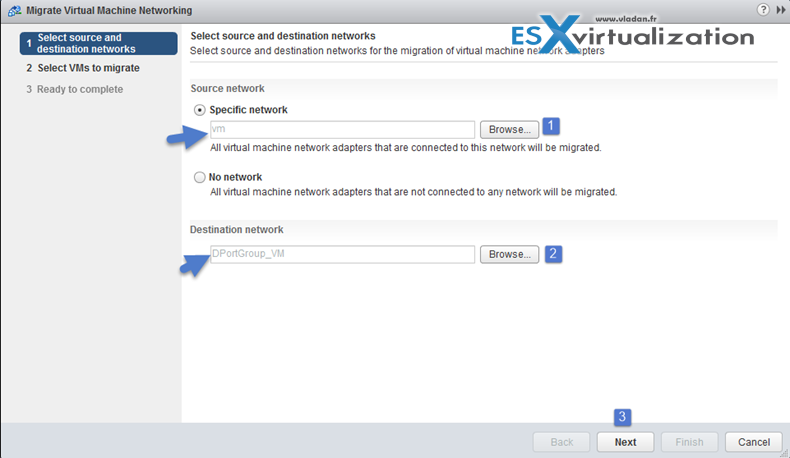

Migrate virtual machines to/from a vSphere Distributed Switch

To migrate VMs to a vDS, right click the vDS > Migrate VM to another network.

Make sure that you have previously created a distributed port group on the same VLAN the VM currently runs on (in my case the VMs run on VLAN 7)…

Pick a VM…

Done!

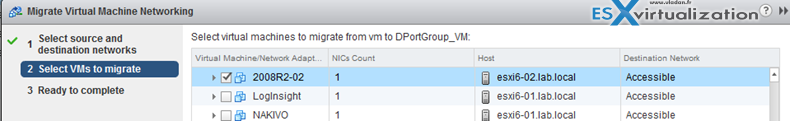

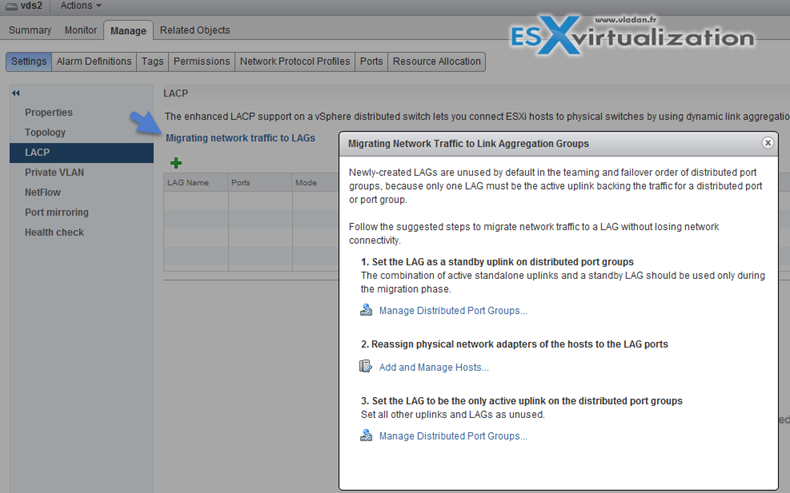

Configure LACP on Uplink portgroups

LACP can be found in the Networking guide on p.65.

vSphere Web Client > Networking > vDS > Manage > Settings > LACP

Create Link Aggregation Groups (LAG)

LAG Mode can be:

- Passive – where the LAG ports respond to LACP packets they receive but do not initiate LACP negotiations.

- Active – where LAG ports are in active mode and initiate negotiations with the LACP port channel.
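A quick way to remember the two modes: at least one side has to be Active, because two Passive ends both wait for the other to start and no LAG ever forms. The Python sketch below encodes just that rule; `lag_forms` is a hypothetical helper name, and it does not check the other settings (such as the hashing) that must also match.

```python
VALID_MODES = {"active", "passive"}


def lag_forms(vds_mode: str, physical_switch_mode: str) -> bool:
    """Return True if LACP negotiation can start between the two ends.

    Passive ports only answer LACP packets; Active ports initiate them.
    So negotiation happens only if at least one side is Active.
    """
    for mode in (vds_mode, physical_switch_mode):
        if mode not in VALID_MODES:
            raise ValueError(f"unknown LACP mode: {mode!r}")
    return "active" in (vds_mode, physical_switch_mode)


print(lag_forms("passive", "active"))   # True
print(lag_forms("passive", "passive"))  # False
```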

LAG load balancing mode (LNB mode):

- Source and destination IP address, TCP/UDP port and VLAN

- Source and destination IP address and VLAN

- Source and destination MAC address

- Source and destination TCP/UDP port

- Source port ID

- VLAN

Note that you must configure the LNB hashing the same way on both the virtual and the physical switch, at the LACP port channel level.

Migrate Network Traffic to Link Aggregation Groups (LAG)

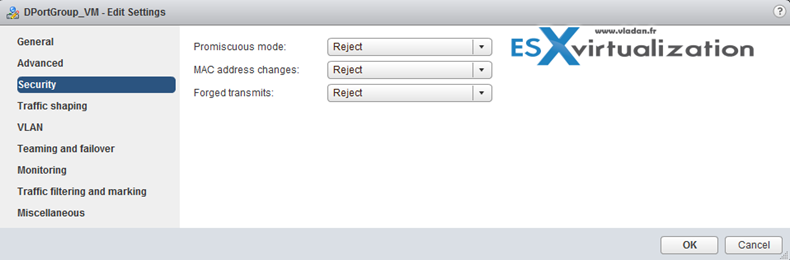

Describe vDS Security Policies/Settings

Note that these security policies also exist on standard switches.

There are 3 different network security policies:

- Promiscuous mode – Reject is the default. If you set it to Accept, the guest OS will receive all traffic observed on the connected vSwitch or port group.

- MAC address changes – Reject is the default. If you set it to Accept, the host accepts requests to change the effective MAC address to an address different from the initial MAC address.

- Forged transmits – Reject is the default. If you set it to Accept, the host does not compare the source and effective MAC addresses of frames transmitted by a virtual machine.

Network security policies can be set on each vDS PortGroup.
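Here is a small Python model of what the three policies check, with all of them at the default Reject. It is a simplification for study purposes: the names `SecurityPolicy`, `allow_mac_change`, `allow_transmit` and `deliver_to_vm` are hypothetical, multicast is ignored, and the real checks happen inside the ESXi virtual switch.

```python
from dataclasses import dataclass

BROADCAST = "ff:ff:ff:ff:ff:ff"


@dataclass
class SecurityPolicy:
    # False = Reject (the default for all three), True = Accept
    promiscuous: bool = False
    mac_changes: bool = False
    forged_transmits: bool = False


def allow_mac_change(policy: SecurityPolicy, initial_mac: str, new_effective_mac: str) -> bool:
    """MAC address changes: may the guest use an effective MAC that differs from its initial MAC?"""
    return policy.mac_changes or new_effective_mac == initial_mac


def allow_transmit(policy: SecurityPolicy, effective_mac: str, frame_src_mac: str) -> bool:
    """Forged transmits: with Reject, frames whose source MAC differs from the effective MAC are dropped."""
    return policy.forged_transmits or frame_src_mac == effective_mac


def deliver_to_vm(policy: SecurityPolicy, vm_mac: str, frame_dst_mac: str) -> bool:
    """Promiscuous mode: with Accept, the guest sees all traffic on the port group, not only its own."""
    return policy.promiscuous or frame_dst_mac in (vm_mac, BROADCAST)


default = SecurityPolicy()
print(allow_transmit(default, "00:50:56:aa:bb:01", "00:50:56:aa:bb:99"))  # False
```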

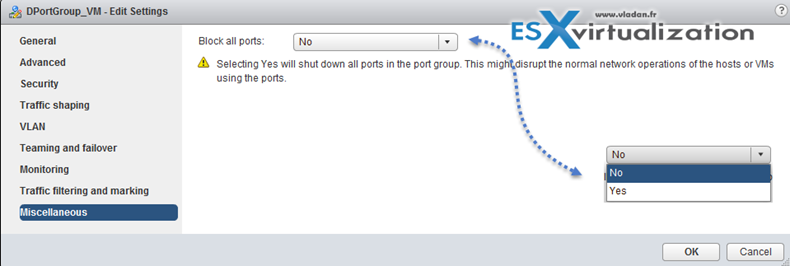

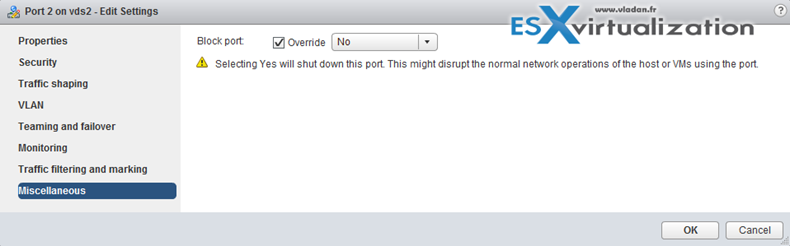

Configure dvPort group blocking policies

Port blocking can be enabled on a port group to block all of its ports, or you can block individual ports or uplinks at the vDS level…

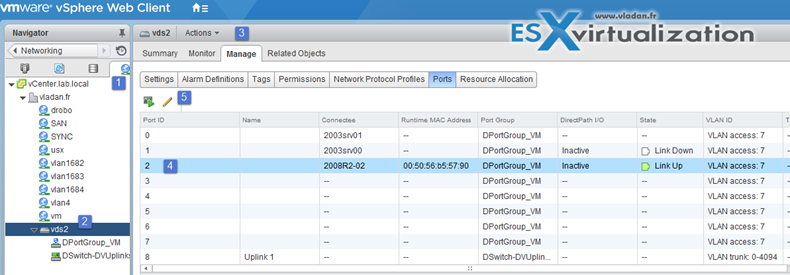

vSphere Web Client > Networking > vDS > Manage > Ports

And then select the port > edit settings > Miscellaneous > Override check box > set Block port to yes.

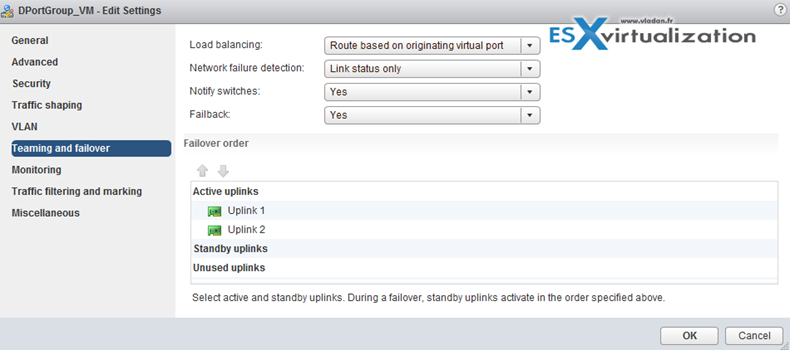

Configure load balancing and failover policies

Load balancing algorithms can be found in the Networking Guide on p. 91.

vDS load balancing (LNB):

- Route based on IP hash – The virtual switch selects uplinks for virtual machines based on the source and destination IP address of each packet.

- Route based on source MAC hash – The virtual switch selects an uplink for a virtual machine based on the virtual machine MAC address. To calculate an uplink for a virtual machine, the virtual switch uses the virtual machine MAC address and the number of uplinks in the NIC team.

- Route based on originating virtual port – Each virtual machine running on an ESXi host has an associated virtual port ID on the virtual switch. To calculate an uplink for a virtual machine, the virtual switch uses the virtual machine port ID and the number of uplinks in the NIC team. After the virtual switch selects an uplink for a virtual machine, it always forwards traffic through the same uplink for this virtual machine as long as the machine runs on the same port. The virtual switch calculates uplinks for virtual machines only once, unless uplinks are added or removed from the NIC team.

- Use explicit failover order – No actual load balancing is available with this policy. The virtual switch always uses the uplink that stands first in the list of Active adapters from the failover order and that passes failover detection criteria. If no uplinks in the Active list are available, the virtual switch uses the uplinks from the Standby list.

- Route based on physical NIC load (only available on vDS) – based on Route Based on Originating Virtual Port, but the virtual switch also checks the actual load of the uplinks and takes steps to reduce it on overloaded uplinks. The distributed switch calculates uplinks for virtual machines by taking their port ID and the number of uplinks in the NIC team. It tests the uplinks every 30 seconds, and if their load exceeds 75 percent of usage, the port ID of the virtual machine with the highest I/O is moved to a different uplink.
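To get a feel for how these policies pin traffic to uplinks, here is a simplified Python model. The modulo arithmetic follows the descriptions above (port ID or MAC plus the number of uplinks in the team), but the exact hash inputs are my assumptions since the guide text does not spell them out, so treat `by_originating_port`, `by_source_mac`, `by_ip_hash` and `lbt_check` as hypothetical illustrations. The LBT part uses the 30-second / 75 percent rule quoted above.

```python
import ipaddress

LBT_THRESHOLD = 0.75  # uplink usage above 75 percent triggers a move (checked every 30 s)


def by_originating_port(port_id: int, n_uplinks: int) -> int:
    """Originating virtual port: port ID modulo the number of uplinks in the team."""
    return port_id % n_uplinks


def by_source_mac(mac: str, n_uplinks: int) -> int:
    """Source MAC hash. Assumption: the last octet of the MAC is the hash input."""
    return int(mac.split(":")[-1], 16) % n_uplinks


def by_ip_hash(src_ip: str, dst_ip: str, n_uplinks: int) -> int:
    """IP hash. Assumption: XOR of source and destination address, modulo team size.
    Each source/destination pair always maps to the same uplink."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return (src ^ dst) % n_uplinks


def lbt_check(placement: dict, port_load: dict, capacity: dict) -> dict:
    """One 30-second LBT pass (simplified).

    placement: port ID -> uplink, port_load: port ID -> Mbit/s,
    capacity: uplink -> Mbit/s. Returns the new placement.
    """
    new = dict(placement)
    usage = {uplink: 0.0 for uplink in capacity}
    for port, uplink in new.items():
        usage[uplink] += port_load[port]
    for uplink in capacity:
        if usage[uplink] / capacity[uplink] > LBT_THRESHOLD:
            # Move the port with the highest I/O to the least-used uplink.
            busiest = max((p for p, u in new.items() if u == uplink), key=port_load.get)
            target = min(capacity, key=lambda u: usage[u] / capacity[u])
            if target != uplink:
                new[busiest] = target
                usage[uplink] -= port_load[busiest]
                usage[target] += port_load[busiest]
    return new


# Two 1 Gbit/s uplinks; uplink 0 is at 90 percent, so port 1 (the busiest) moves.
print(lbt_check({1: 0, 2: 0, 3: 1}, {1: 600, 2: 300, 3: 100}, {0: 1000, 1: 1000}))
# {1: 1, 2: 0, 3: 1}
```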

Virtual switch failover order:

- Active uplinks

- Standby uplinks

- Unused uplinks
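The failover order is easy to express as a short function. A minimal Python sketch, assuming each uplink's health comes from failover detection (for example link status or beacon probing); `pick_uplink` and the vmnic names are hypothetical. This is the selection rule of Use explicit failover order; for the other policies, the order decides which uplinks take part in the team.

```python
from typing import Callable, Optional, Sequence


def pick_uplink(active: Sequence[str], standby: Sequence[str],
                is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first healthy Active uplink, else the first healthy Standby uplink.

    Unused uplinks are not passed in at all: the switch never selects them.
    """
    for nic in list(active) + list(standby):
        if is_healthy(nic):
            return nic
    return None  # no usable uplink left


failed = {"vmnic0"}
print(pick_uplink(["vmnic0", "vmnic1"], ["vmnic2"], lambda nic: nic not in failed))  # vmnic1
```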

Configure VLAN/PVLAN settings

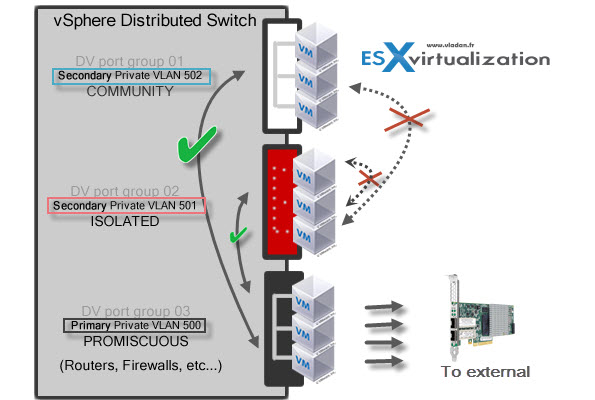

Private VLANs allow further segmentation and the creation of private groups inside each VLAN. By using private VLANs (PVLANs) you split the broadcast domain into multiple isolated broadcast “subdomains”.

Private VLANs need to be configured at the physical switch level (the switch must support PVLANs) and also on the VMware vSphere Distributed Switch (Enterprise Plus is required). It’s more expensive and takes a bit more work to set up.

There are different types of PVLANs:

Primary

- Promiscuous Primary VLAN – Imagine this VLAN as a kind of router. All packets from the secondary VLANs go through this VLAN, and it is also used to forward packets downstream to all secondary VLANs.

Secondary

- Isolated (Secondary) – VMs can communicate with other devices on the Promiscuous VLAN but not with other VMs on the Isolated VLAN.

- Community (Secondary) – VMs can communicate with devices on the Promiscuous VLAN and also with other VMs on the same community VLAN.

The graphic shows it all…

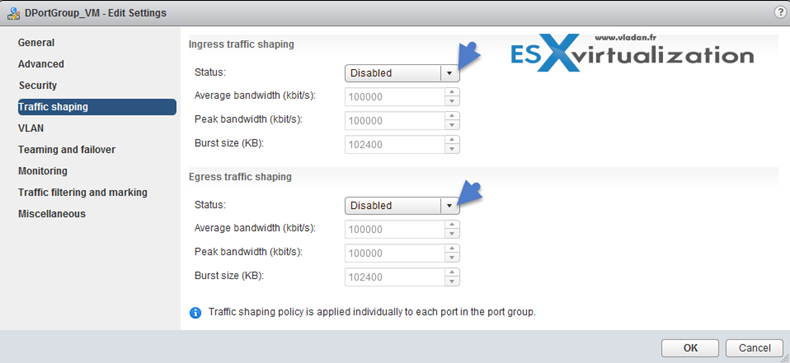
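The communication rules can also be written as a small truth table. The Python sketch below is a study aid, not vSphere configuration; `pvlan_can_talk` is a hypothetical helper and the VLAN IDs (primary 100, isolated 101, communities 102 and 103) are made-up examples.

```python
def pvlan_can_talk(a: tuple, b: tuple) -> bool:
    """a and b are (pvlan_type, secondary_vlan_id) for two endpoints.

    pvlan_type is "promiscuous", "isolated" or "community".
    """
    type_a, vlan_a = a
    type_b, vlan_b = b
    if "promiscuous" in (type_a, type_b):
        return True  # the promiscuous (primary) VLAN reaches everything
    if type_a == "community" and type_b == "community":
        return vlan_a == vlan_b  # only within the same community VLAN
    return False  # isolated ports only talk to promiscuous ports


router = ("promiscuous", 100)
web1, web2 = ("community", 102), ("community", 102)
db = ("community", 103)
iso1, iso2 = ("isolated", 101), ("isolated", 101)

print(pvlan_can_talk(web1, web2), pvlan_can_talk(web1, db),
      pvlan_can_talk(iso1, iso2), pvlan_can_talk(iso1, router))
# True False False True
```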

Configure traffic shaping policies

Networking Guide p.105

vDS supports both ingress and egress traffic shaping.

Traffic shaping policy is applied to each port in the port group. You can enable or disable ingress and egress traffic shaping separately, each with the following settings:

- Average bandwidth in kbits (Kb) per second – Establishes the number of bits per second to allow across a port, averaged over time. This number is the allowed average load.

- Peak bandwidth in kbits (Kb) per second – Maximum number of bits per second to allow across a port when it is sending or receiving a burst of traffic. This number limits the bandwidth that a port uses when it is using its burst bonus.

- Burst size in kbytes (KB) – Maximum number of bytes to allow in a burst. If set, a port might gain a burst bonus if it does not use all its allocated bandwidth. When the port needs more bandwidth than specified by the average bandwidth, it might be allowed to temporarily transmit data at a higher speed if a burst bonus is available.
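To see how the three values interact, here is a per-second simulation in Python. It is a simplified model and the bookkeeping is my assumption (the exact shaping algorithm is not described in the guide): unused average bandwidth accumulates as a burst bonus capped at the burst size, and a burst can never exceed the peak bandwidth. `shape` is a hypothetical helper.

```python
def shape(demand_kbits: list, avg_kbps: int, peak_kbps: int, burst_kb: int) -> list:
    """Simulate one shaped port, one step per second.

    demand_kbits: kbits the port wants to send in each second.
    Returns the kbits actually allowed through in each second.
    """
    bonus_kbits = 0
    cap_kbits = burst_kb * 8  # burst size is in kilobytes; convert to kilobits
    sent = []
    for want in demand_kbits:
        allowed = min(peak_kbps, avg_kbps + bonus_kbits)  # never above peak
        out = min(want, allowed)
        if out <= avg_kbps:
            # Unused average bandwidth is saved as burst bonus, up to the burst size.
            bonus_kbits = min(cap_kbits, bonus_kbits + (avg_kbps - out))
        else:
            # Sending above the average consumes the bonus.
            bonus_kbits -= out - avg_kbps
        sent.append(out)
    return sent


# One idle second earns a 1000 kbit bonus, which the next second spends.
print(shape([0, 5000, 5000], avg_kbps=1000, peak_kbps=4000, burst_kb=250))  # [0, 2000, 1000]
```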

Enable TCP Segmentation Offload support for a virtual machine

Use TCP Segmentation Offload (TSO) in VMkernel network adapters and virtual machines to improve the network performance in workloads that have severe latency requirements.

When TSO is enabled, the network adapter, rather than the CPU, divides larger data chunks into TCP segments. The VMkernel and the guest operating system can then use more CPU cycles to run applications.

By default, TSO is enabled in the VMkernel of the ESXi host and in the VMXNET 2 and VMXNET 3 virtual machine adapters.
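What TSO takes off the CPU is essentially this loop: cutting one large send into MSS-sized TCP segments. A minimal Python sketch for illustration only; `tso_segment` is a hypothetical helper, the MSS of 1460 bytes assumes a standard 1500-byte MTU minus a 20-byte IP header and a 20-byte TCP header without options, and a real NIC also builds the headers and checksums for every segment.

```python
def tso_segment(payload: bytes, mss: int = 1460) -> list:
    """Split one large TCP send into MSS-sized segments (the work TSO offloads)."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


segments = tso_segment(b"x" * 64000)
print(len(segments), len(segments[-1]))  # 44 1220
```

Inside a Linux guest, `ethtool -k <interface>` shows whether tcp-segmentation-offload is currently on.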

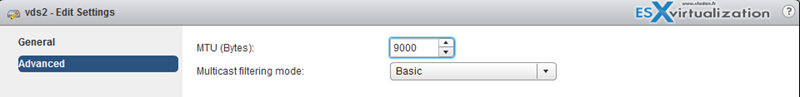

Enable Jumbo Frames support on appropriate components

There are many places where you can enable jumbo frames, and you should enable them end-to-end. Otherwise performance will not improve; it may even get worse. Jumbo frames can be enabled on a vSwitch, a vDS, and a VMkernel adapter.

The maximum MTU value for jumbo frames is 9000.
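The reason jumbo frames must be configured end-to-end is that a frame can only be as large as the smallest MTU along its path. This Python sketch makes that check explicit; `effective_mtu`, `below_target` and the component names in the example path are hypothetical.

```python
JUMBO_MTU = 9000


def effective_mtu(path: list) -> int:
    """path is a list of (component, mtu). The smallest MTU on the path wins."""
    return min(mtu for _, mtu in path)


def below_target(path: list, target: int = JUMBO_MTU) -> list:
    """Components that still need their MTU raised for end-to-end jumbo frames."""
    return [name for name, mtu in path if mtu < target]


# Hypothetical path from a VMkernel adapter to an iSCSI/NFS target
path = [("vmk1", 9000), ("vDS", 9000), ("physical switch port", 1500), ("storage array", 9000)]
print(effective_mtu(path), below_target(path))  # 1500 ['physical switch port']
```

On ESXi, a common way to verify the real path is `vmkping -d -s 8972 <target IP>`: `-d` sets the don't-fragment bit, and an 8972-byte payload plus 28 bytes of IP and ICMP headers adds up to 9000.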

Determine appropriate VLAN configuration for a vSphere implementation

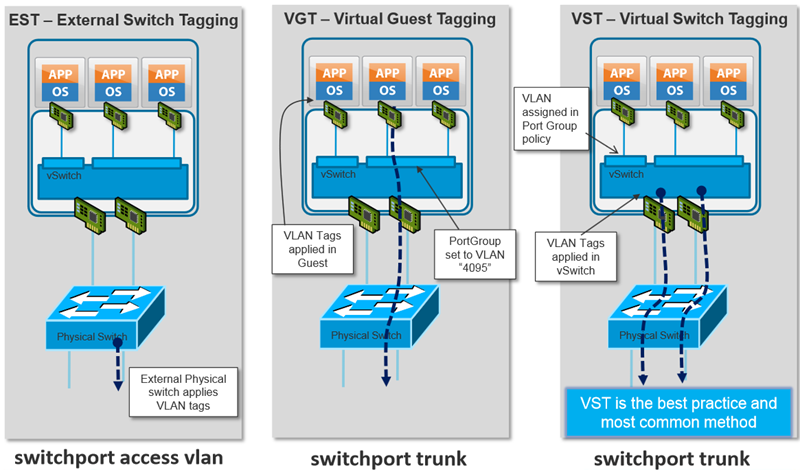

There are three main places, or three different ways, to tag frames in vSphere.

- External Switch Tagging (EST) – VLAN ID is set to None or 0 and it is the physical switch that does the VLAN tagging.

- Virtual Switch Tagging (VST) – VLAN set between 1 and 4094 and the virtual switch does the VLAN tagging.

- Virtual Guest Tagging (VGT) – the tagging happens in the guest OS. The VLAN is set to 4095 (standard vSwitch) or to VLAN trunking on a vDS.
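The VLAN ID rules above fit in a few lines. A minimal Python sketch, assuming a standard vSwitch port group (on a vDS, VGT is configured as VLAN trunking rather than 4095); `tagging_mode` is a hypothetical helper.

```python
def tagging_mode(vlan_id: int) -> str:
    """Map a standard vSwitch port group VLAN ID to the tagging mode."""
    if vlan_id == 0:
        return "EST"  # physical switch does the tagging
    if 1 <= vlan_id <= 4094:
        return "VST"  # virtual switch does the tagging
    if vlan_id == 4095:
        return "VGT"  # guest OS does the tagging
    raise ValueError(f"invalid VLAN ID: {vlan_id}")


print([tagging_mode(v) for v in (0, 7, 4095)])  # ['EST', 'VST', 'VGT']
```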

The best way to understand this is, I guess, the VMware document called Best Practices for Virtual Networking, from which I also “borrowed” this screenshot…

Networking is a big chapter. If I missed something, just comment or email me your suggestion. Thanks…

